Which are the smartest AI models, by IQ?

Artificial intelligence is, by design, built to be intelligent, and some AI models now rival or even exceed humans on certain tests. Compared with one another, however, some models are clearly smarter than others.

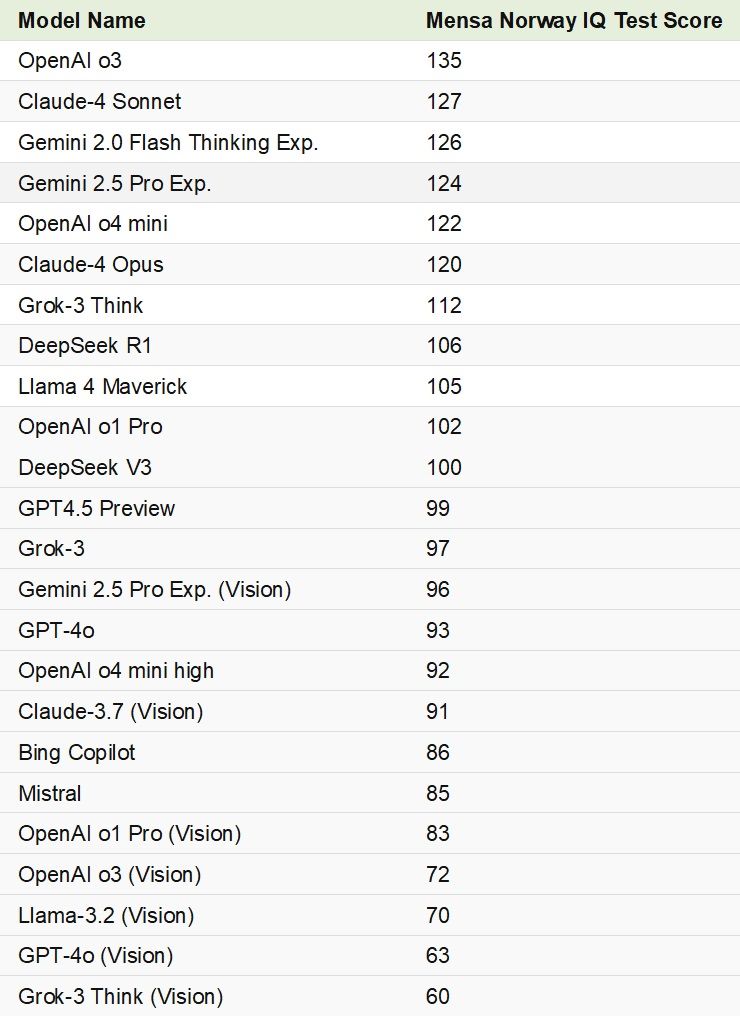

A new analysis from Tracking AI offers insight into the intellectual prowess of today’s top AI models, ranking them based on their scores on the Mensa Norway IQ test — a challenging exam typically used to assess high-level human reasoning skills.

According to the data, OpenAI’s text-only model o3 leads the pack with an IQ score of 135, placing it firmly in the “genius” category. For reference, the average human IQ range is 90 to 110, and scores above 130 are considered indicative of genius-level intelligence.

Following closely behind are Anthropic’s Claude-4 Sonnet with a score of 127 and Google’s Gemini 2.0 Flash Thinking at 126. Other high performers include Gemini 2.5 Pro and OpenAI’s o4 mini, both of which exceed an IQ of 120, well above the average human range.

Interestingly, all ten of the highest-scoring models are text-only, lacking the ability to interpret visual input such as images or video. This suggests that, at least for now, AI performs far better at verbal reasoning and linguistic tasks than at visual processing.

At the lower end of the IQ spectrum, multimodal models — which can analyze both text and images — performed significantly worse. OpenAI’s GPT-4o (Vision) scored just 63, and xAI’s Grok-3 Think (Vision) trailed with a score of 60, both well below the human average.

China’s DeepSeek ranks eighth, with a score of 106.

These findings underscore the evolving cognitive capabilities of AI. While some models now rival or surpass human intelligence in abstract reasoning and problem-solving, others still lag behind in areas like visual interpretation.

Political leanings: AI's leftward tilt

Beyond intelligence, researchers have also examined the political biases embedded within AI systems. As of late 2023, most major AI models had been identified as economically left-wing and socially libertarian, although the degree of bias varied by model.

According to the analysts, Anthropic’s Claude tends to be one of the most moderate models, while Google’s Bard is cited as one of the furthest to the left.

Two main factors influence these political tendencies:

1. Training Data: Models trained on sources with known biases — such as Wikipedia or mainstream news outlets — may inherit those perspectives.

2. Human Feedback: AI responses are refined through reinforcement learning, often guided by human raters. The political or cultural leanings of these raters, and the evaluation criteria they use, can shape a model’s output over time.

The analysis offers a revealing snapshot of where AI stands today: not only outthinking average humans on certain tests of intelligence but also absorbing, and at times amplifying, the values of the digital environments in which it is trained.